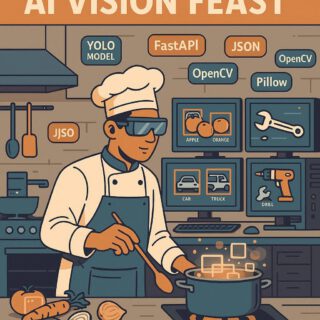

Main Dish (Idea):

This recipe creates an automated object detection system. It takes a folder of images as input, processes them with a pre-trained YOLO model served through a FastAPI endpoint, and outputs the bounding boxes, labels, and confidence scores for each detected object. It also displays each image with its bounding boxes drawn on it.

Ingredients (Concepts & Components):

- Freshly captured frames: Images from a folder (the `frames` directory). These are the visual input for our dish.
- FastAPI Marinade: A FastAPI application to create an API endpoint, serving as the communication layer.
- YOLO Reduction: A pre-trained YOLO model (`yolo11s.pt`) acts as the primary “flavoring” – the object detection algorithm.
- Image Processing Glaze: PIL (Pillow) and NumPy are used to convert and prepare the images for the YOLO model.
- OpenCV Garnish: Displays the image with bounding boxes.
- Requests Digest: Used for sending HTTP requests to the API endpoint.
- JSON Reduction: JSON for structuring and returning the processed detection data.

Cooking Process (How It Works):

Frame Harvesting:

- Gather all the images from the designated `frames` folder. This is our initial mise en place.
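The harvesting step can be sketched with the standard library alone. The function name `get_image_files` is the one the frontend step uses; the list of accepted extensions and the sorted ordering are assumptions made for illustration, since the recipe only says "images".

```python
from pathlib import Path

# Assumed set of image extensions; adjust to whatever your frames use.
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png", ".bmp"}


def get_image_files(folder):
    """Return the image files directly inside `folder`, sorted by name."""
    root = Path(folder)
    if not root.is_dir():
        raise FileNotFoundError(f"Image folder not found: {root}")
    return sorted(
        path
        for path in root.iterdir()
        # Skip sub-folders and anything that does not look like an image.
        if path.is_file() and path.suffix.lower() in IMAGE_EXTENSIONS
    )
```

Calling `get_image_files("frames")` would then hand back a list of `Path` objects ready to be sent to the API one by one.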

API Infusion (Backend – FastAPI):

- The FastAPI app starts, loading the `yolo11s.pt` model, which preps the object-detecting sauce.
- It defines a `/predict` POST endpoint, ready to receive image ingredients.
- Upon receiving an image:
  - The image (in bytes) is read and converted into a NumPy array.
  - The YOLO model then processes the image, identifying objects and providing bounding boxes, labels, and confidence scores.
  - The results are formatted into a JSON response with a list of `"detected_objects"`.
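The backend steps above could look roughly like this. It is a sketch under assumptions, not the recipe's actual code: the helper names `format_detections` and `create_app`, the upload field name `file`, and the response keys `label`, `confidence`, and `bbox` are all hypothetical (only `"detected_objects"` and `/predict` come from the recipe). It also assumes the model expects NumPy input in OpenCV's BGR channel order, which is how Ultralytics documents array inputs.

```python
def format_detections(boxes, class_ids, confidences, names):
    """Turn raw detector output into the JSON-ready response body."""
    detected_objects = []
    for box, class_id, confidence in zip(boxes, class_ids, confidences):
        detected_objects.append({
            "label": names[int(class_id)],     # assumed key name
            "confidence": float(confidence),   # assumed key name
            "bbox": [float(v) for v in box],   # assumed key name: [x1, y1, x2, y2]
        })
    return {"detected_objects": detected_objects}


def create_app(model):
    """Build the FastAPI app around an already loaded YOLO model."""
    # Third-party imports live inside the factory so that
    # format_detections stays usable without the web stack installed.
    import io

    import numpy as np
    from fastapi import FastAPI, File, UploadFile
    from PIL import Image

    app = FastAPI()

    @app.post("/predict")
    def predict(file: UploadFile = File(...)):
        # Bytes -> PIL image -> NumPy array.
        image = Image.open(io.BytesIO(file.file.read())).convert("RGB")
        # Assumption: array inputs are expected in BGR order, so flip RGB.
        frame = np.ascontiguousarray(np.array(image)[:, :, ::-1])
        result = model(frame)[0]  # one image in, one result out
        return format_detections(
            result.boxes.xyxy.tolist(),
            result.boxes.cls.tolist(),
            result.boxes.conf.tolist(),
            result.names,
        )

    return app
```

To serve it, one would build the app once at startup, for example `app = create_app(YOLO("yolo11s.pt"))` with `YOLO` imported from `ultralytics`, and run it with an ASGI server such as uvicorn. FastAPI needs the `python-multipart` package installed to accept file uploads.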

Image Relay (Frontend – Image Sending):

- The `get_image_files` function scans the designated folder (`frames`).
- Loop through each image file found.
- For each image:
  - Image Transfer: The image is sent as a file to the `/predict` endpoint of the FastAPI server, starting the detection process.
  - Data Receiving and Decoding: The JSON response from the API is received and decoded. It contains the list of detected objects with their bounding boxes, labels, and confidence scores.
  - Bounding Box Display: The image is shown with the bounding boxes drawn on it.
  - Result Printing: The detected objects are printed.
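A minimal client for the relay steps above might look like the following. Treat it as a sketch: the server address, the form-field name `file`, the response keys `label`, `confidence`, and `bbox`, and the helper names `format_caption`, `send_image`, `show_detections`, and `process_images` are assumptions for illustration and must match whatever the backend actually uses.

```python
API_URL = "http://127.0.0.1:8000/predict"  # assumed address of the FastAPI server


def format_caption(obj):
    """Text drawn next to a box and printed to the console."""
    return f"{obj['label']} {obj['confidence']:.2f}"


def send_image(image_path, url=API_URL):
    """POST one image to /predict and return the list of detected objects."""
    import requests  # third-party; imported here so the other helpers work without it

    with open(image_path, "rb") as handle:
        # "file" is an assumed field name; it must match the server's parameter.
        response = requests.post(url, files={"file": handle}, timeout=30)
    response.raise_for_status()
    return response.json()["detected_objects"]


def show_detections(image_path, detections):
    """Draw each bounding box with its caption and display the image."""
    import cv2  # third-party (opencv-python)

    image = cv2.imread(str(image_path))
    if image is None:
        raise ValueError(f"Could not read image: {image_path}")
    for obj in detections:
        x1, y1, x2, y2 = (int(round(v)) for v in obj["bbox"])
        cv2.rectangle(image, (x1, y1), (x2, y2), (0, 255, 0), 2)
        cv2.putText(image, format_caption(obj), (x1, max(y1 - 5, 15)),
                    cv2.FONT_HERSHEY_SIMPLEX, 0.5, (0, 255, 0), 1)
    cv2.imshow("detections", image)
    cv2.waitKey(0)  # wait for a key press before moving on
    cv2.destroyAllWindows()


def process_images(image_paths):
    """Send, print, and display every image; return how many were processed."""
    processed = 0
    for image_path in image_paths:
        detections = send_image(image_path)
        for obj in detections:
            print(f"{image_path}: {format_caption(obj)} at {obj['bbox']}")
        show_detections(image_path, detections)
        processed += 1
    return processed
```

With the server running, `process_images(get_image_files("frames"))` would walk the whole folder, pausing on each displayed image until a key is pressed.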

Serving Suggestion (Outcome):

This “AI Vision Feast” delivers a system that automatically processes images, identifies objects within them, and provides a structured JSON output detailing the object locations, labels, and confidence. It allows for automated understanding of image content, making it useful for a wide range of applications, from surveillance and robotics to image analysis and content moderation, all from a simple folder of images.
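As a small taste of what the structured output makes possible, here is a sketch that counts detections per label above a confidence threshold. Only the `"detected_objects"` key comes from the recipe; the per-object keys `label`, `confidence`, and `bbox`, the helper name `count_labels`, and the sample response are made up for illustration.

```python
from collections import Counter


def count_labels(response, min_confidence=0.5):
    """Count detections per label, ignoring low-confidence ones."""
    return Counter(
        obj["label"]
        for obj in response.get("detected_objects", [])
        if obj["confidence"] >= min_confidence
    )


# Made-up response for illustration only; not real model output.
example = {
    "detected_objects": [
        {"label": "person", "confidence": 0.91, "bbox": [34.0, 50.0, 210.0, 400.0]},
        {"label": "person", "confidence": 0.42, "bbox": [300.0, 60.0, 380.0, 310.0]},
        {"label": "dog", "confidence": 0.77, "bbox": [220.0, 250.0, 330.0, 390.0]},
    ]
}

print(dict(count_labels(example)))  # {'person': 1, 'dog': 1}
```

The same pattern extends to filtering by region or raising an alert when a particular label appears, which is where applications like surveillance or content moderation would pick up.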