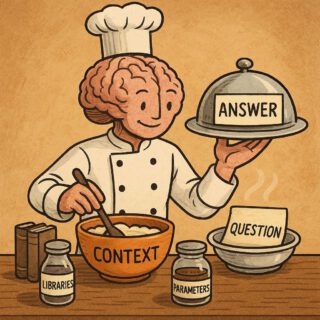

1. Main Dish (Idea)

A self-contained, conversational question-answering system. You provide a question, and the system serves up a relevant, informative answer, utilizing the knowledge embedded within a large language model.

2. Ingredients (Concepts & Components)

- The Brain (Large Language Model – Mistral-7b-instruct-v0.1.Q6_K.gguf): The core intelligence, a pre-trained language model with a broad grasp of language, facts, and relationships, stored as a `.gguf` file (the GGUF format that llama.cpp uses for model weights; `Q6_K` names a 6-bit quantization scheme).

- The Chef’s Knife (Llama-cpp Library): A library (llama.cpp, used from Python through the `Llama` class of its llama-cpp-python bindings) for loading and running Llama-family and other GGUF models efficiently, acting as the interface to the large language model.

- The Prompt (Question): The input to the system: the user’s question, formatted as an instruction for the model.

- The Oven Controls (Parameters): Configuration settings that fine-tune how the LLM generates a response: `max_tokens` (limits the output length), `temperature` (controls randomness/creativity), `top_p` (controls output diversity through nucleus sampling), and `repeat_penalty` (reduces repetition in answers).

- Serving Dish (Output): The answer generated by the LLM, as formatted text.
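To make the oven controls concrete, here is a toy Python illustration of what `temperature`, `top_p`, and `repeat_penalty` do to a model’s next-token scores. It is a simplified sketch on a hand-made three-token vocabulary, not llama.cpp’s actual sampler: the function names and scores are hypothetical, and the repeat-penalty rule (divide positive scores by the penalty, multiply negative ones) is assumed to approximate the scheme llama.cpp uses. `max_tokens` needs no illustration, since it simply caps how many tokens are generated.

```python
import math
from typing import Dict, List


def apply_repeat_penalty(logits: Dict[str, float], recent_tokens: List[str],
                         penalty: float) -> Dict[str, float]:
    """Make recently generated tokens less likely (penalty > 1.0 discourages repeats)."""
    penalized = dict(logits)
    for tok in set(recent_tokens):
        if tok in penalized:
            score = penalized[tok]
            # Shrink positive scores toward zero and push negative scores further down.
            penalized[tok] = score / penalty if score > 0 else score * penalty
    return penalized


def apply_temperature(logits: Dict[str, float], temperature: float) -> Dict[str, float]:
    """Softmax of logits / temperature: below 1.0 sharpens, above 1.0 flattens the distribution."""
    scaled = {tok: score / temperature for tok, score in logits.items()}
    peak = max(scaled.values())  # subtract the max before exp() for numerical stability
    exps = {tok: math.exp(v - peak) for tok, v in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def apply_top_p(probs: Dict[str, float], top_p: float) -> Dict[str, float]:
    """Nucleus filtering: keep the most likely tokens until their cumulative probability reaches top_p."""
    kept: Dict[str, float] = {}
    cumulative = 0.0
    for tok, p in sorted(probs.items(), key=lambda kv: kv[1], reverse=True):
        kept[tok] = p
        cumulative += p
        if cumulative >= top_p:
            break
    total = sum(kept.values())
    return {tok: p / total for tok, p in kept.items()}  # renormalize the surviving tokens


# Hypothetical next-token scores after the prompt "The capital of France is".
# Real samplers differ in the order they apply these steps; this sketch simply chains them.
logits = {"Paris": 4.0, "Lyon": 2.0, "Nice": 1.0}
probs = apply_top_p(apply_temperature(logits, temperature=0.7), top_p=0.9)
```

Lowering `temperature` concentrates probability on the top token, and a smaller `top_p` discards the unlikely tail entirely, which is why low values of both give more predictable answers.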

3. Cooking Process (How It Works)

- Preparation (Loading the Model): First, the `Llama` object (the chef’s knife) loads the Mistral model from the `.gguf` file (the brain). This is a one-time operation at the start, essentially “waking up” the AI.

- Seasoning (Prompting): The user’s question, the prompt, is carefully written and given to the LLM. It’s like adding spices to a dish: the quality of the prompt affects the quality of the answer.

- Baking (Inference): The `Llama` object runs the prompt through the model, which generates the answer one token at a time, predicting each next token from the prompt and the tokens produced so far. The oven controls (parameters) shape the response’s characteristics: its maximum length, randomness, diversity, and repetitiveness.

- Plating (Output): The model’s answer is formatted and returned as text, ready to be served.

- Timing (Performance Measurement): The elapsed time for the entire process is also measured.
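The cooking steps above can be sketched end to end in Python with the llama-cpp-python bindings. This is a minimal sketch, not the author’s code: the model path (assumed to sit in the working directory), the context size `n_ctx=2048`, the parameter values, and the helper names `load_model`, `answer_question`, and `main` are all assumptions. The `[INST] ... [/INST]` wrapper follows Mistral-7B-Instruct’s prompt format; the leading `<s>` token is left out on the assumption that llama.cpp adds it during tokenization. `llama_cpp` is imported inside `load_model`, so `answer_question` can be exercised with any callable that returns the same completion dictionary.

```python
import time
from typing import Any, Callable, Dict, Tuple

# Assumed location of the model file; adjust to wherever the .gguf file lives.
MODEL_PATH = "Mistral-7b-instruct-v0.1.Q6_K.gguf"


def load_model(model_path: str = MODEL_PATH) -> Any:
    """Preparation: load the GGUF weights once (requires llama-cpp-python)."""
    from llama_cpp import Llama  # imported lazily so the rest of the sketch runs without it
    return Llama(model_path=model_path, n_ctx=2048, verbose=False)


def answer_question(llm: Callable[..., Dict[str, Any]], question: str,
                    max_tokens: int = 256, temperature: float = 0.7,
                    top_p: float = 0.95, repeat_penalty: float = 1.1) -> Tuple[str, float]:
    """Season, bake, and plate one question; return (answer, seconds spent)."""
    start = time.perf_counter()
    # Seasoning: wrap the question in Mistral's instruction format.
    prompt = f"[INST] {question.strip()} [/INST]"
    # Baking: run inference with the oven controls (hypothetical default values).
    output = llm(prompt, max_tokens=max_tokens, temperature=temperature,
                 top_p=top_p, repeat_penalty=repeat_penalty)
    # Plating: the completion dict stores generated text under choices[0]["text"].
    answer = output["choices"][0]["text"].strip()
    # Timing: time from prompt construction to the plated answer.
    elapsed = time.perf_counter() - start
    return answer, elapsed


def main() -> None:
    """Run the whole recipe once and report the total time, including model loading."""
    start = time.perf_counter()
    llm = load_model()
    answer, _ = answer_question(llm, "What is the capital of France?")
    total = time.perf_counter() - start
    print(answer)
    print(f"Total time (load + inference): {total:.2f} s")
```

Calling `main()` loads the model, asks a single question, and prints the answer with the total elapsed time. Passing `llm` into `answer_question` rather than loading the model inside it keeps the expensive loading step a one-time operation, as in the Preparation step, so many questions can be served from one loaded model.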

4. Serving Suggestion (Outcome)

A system capable of providing answers to a variety of questions. It’s designed for simple question answering, able to respond in a coherent and informative manner, drawing on the knowledge it was trained on.